Our earlier experiments derived some of the darker statistics of the Voynich Manuscript supporting the conjecture, but not erasing all doubt, that the manuscript’s cryptic graphemes are drawn from some natural, or shudderingly unnatural, language.

Despite our beliefs regarding its authenticity, however, the statistical tools we have employed so far can tell us little about the structure, and almost nothing of the meaning, of the Voynich Manuscript. In this post, whilst shying away from the madness and confusion of attempting to translate MS 408, or of definitively identifying its language, we will delve into the extent to which modern natural language processing techniques can reveal its lesser secrets.

The mechanisms we will apply in this post are drawn from the world of topic modelling, an approach widely used in the processing of human language to identify eerily related documents within a corpus of text.

Topic modelling, in its most widely used form, treats each document as a nebulous admixture of unseen and unknowable topics. These topics are themselves probability distributions over words that are likely to occur together. Each document, therefore, is characterised by its own mixture of these distributions, which together generate the observed words. This approach, known as latent Dirichlet allocation, dispassionately extracts the hidden structure of documents by inferring these underlying distributions.
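The generative story that latent Dirichlet allocation assumes can be sketched in a few lines of Python with NumPy. This is an illustration, not the R code used for this post. The function name, the toy vocabulary, and the Dirichlet concentration parameters `alpha` and `eta` are our own assumptions. Fitting a topic model amounts to running this story in reverse: it infers plausible `theta` (per-document topic mixtures) and `beta` (per-topic word distributions) from the observed words alone.

```python
import numpy as np


def generate_corpus(n_docs, doc_length, vocab, n_topics, alpha=0.1, eta=0.1, seed=0):
    """Sample a toy corpus from the LDA generative story (illustrative only)."""
    rng = np.random.default_rng(seed)
    n_words = len(vocab)
    # Each topic is a probability distribution over the whole vocabulary.
    beta = rng.dirichlet(np.full(n_words, eta), size=n_topics)
    docs, thetas = [], []
    for _ in range(n_docs):
        # Each document is its own mixture of topics.
        theta = rng.dirichlet(np.full(n_topics, alpha))
        # For every word slot: pick a topic from theta, then a word from that topic.
        topics = rng.choice(n_topics, size=doc_length, p=theta)
        docs.append([vocab[rng.choice(n_words, p=beta[k])] for k in topics])
        thetas.append(theta)
    return docs, np.array(thetas), beta


docs, theta, beta = generate_corpus(
    n_docs=3, doc_length=8, vocab=["moon", "herb", "star", "root"], n_topics=2
)
```

Small values of `alpha` push each document towards only a few topics. Small values of `eta` push each topic towards only a few words.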

For known languages, latent Dirichlet allocation extrudes a set of topics characterised by the high-probability words that they generate. These, in turn, can be subjected to human interpretation to identify the semantic underpinnings behind the topics.

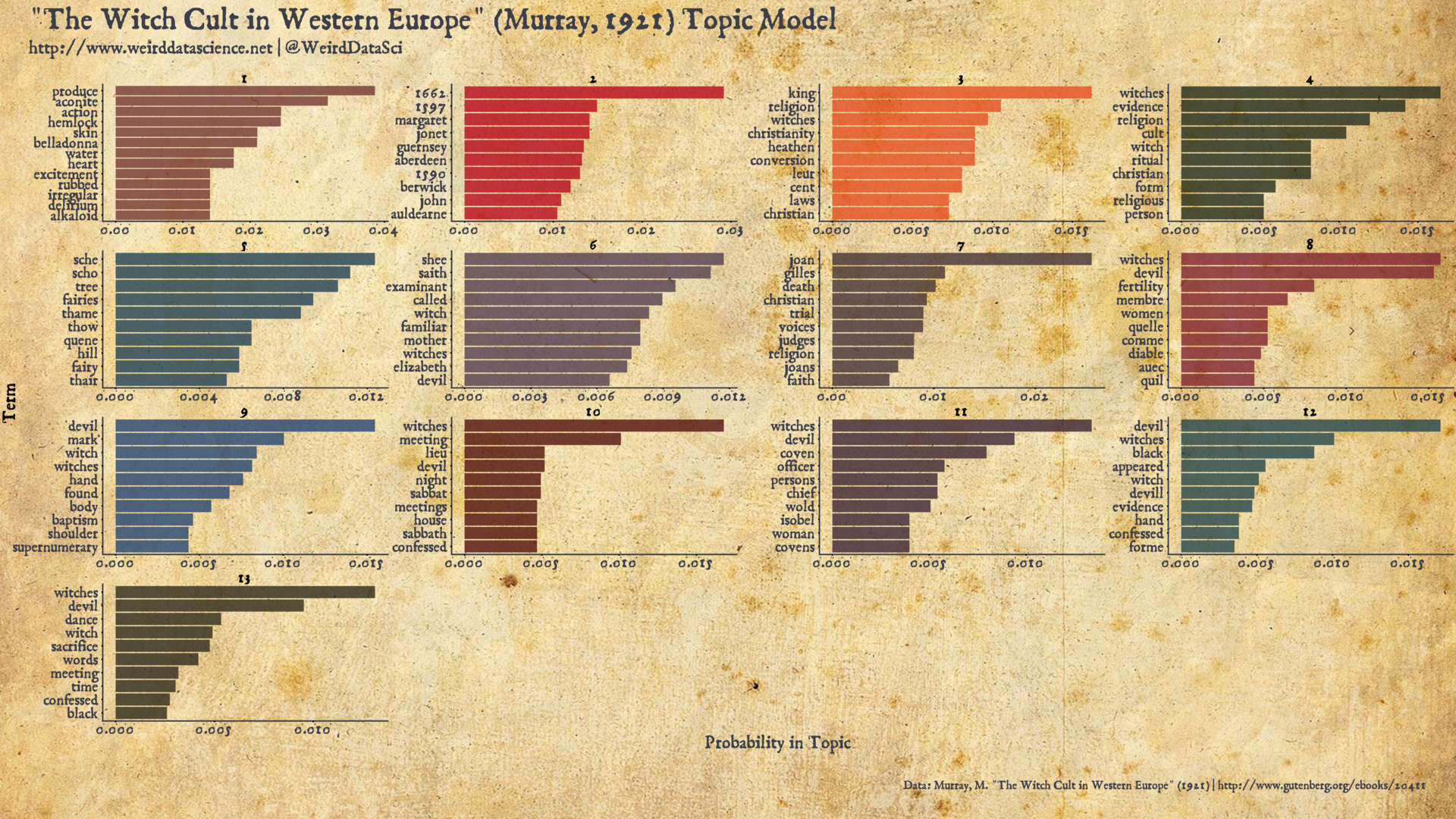

To illustrate, we present a topic model of Margaret A. Murray’s seminal 1921 work “The Witch Cult in Western Europe”. There are many uneasy subtleties in producing such a model, into which we will not plunge at this early stage. Even at a quick glance, however, we can see that the algorithm identifies certain prevalent themes in Murray’s detailed research and interwoven arguments for the modern-day survival of an ancient witch cult in Europe. The third topic, for example, appears to conjure terms related to the conflict between the accepted state religion and the ‘heathen’ witch cult. The ninth topic concerns itself with the witches’ marks, supposedly identified on the bodies of practitioners, while the tenth dwells on the clandestine meetings and sabbaths of the cult.

As the plot above suggests, topic modelling is a tool to support our limited human understanding rather than a cold, mechanical source of objectivity. As with much unsupervised machine learning, there are various subjective choices that must be guided by the intended purpose of the analysis. Drawing together imperceptible threads of relation in bodies of text, the approach suggests major themes and, crucially, can associate disparate areas of text that focus on similar concerns.

Topical Remedies

What, then, can we learn by bringing the oppressive weight of latent Dirichlet allocation to bear against a cryptic tome whose words, and indeed letters, resist our best efforts at interpretation?

Without an understanding of individual words, we will be unable to glean the semantic understanding of topics that was possible with Murray’s Witch Cult. There is a chance, however, that the topic model can derive relations between separated sections of the manuscript. Do certain early pages demonstrate a particular textual relationship to later pages? Do sections of the overall manuscript retain an apparent coherence of topics, with contiguous pages being drawn from a small range of similar topics? Which Voynich words fall under similar topics?

Preparations

Topic modelling typically requires text to undergo a certain level of formulaic preparation. The most common of such rituals are stemming, lemmatization, and stopword removal. Briefly, stemming and lemmatization aim to reduce confusion by rendering words down to their purest essence. Stemming is the cruder heuristic, unsympathetically incising endings and so truncating “dark”, “darker”, and “darkest” to the atomic root “dark”. Lemmatization requires more understanding, untangling parts of speech and context: to curse is a verb, while a curse is a noun, so the two identical words should be treated separately.
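To make the crudeness of stemming concrete, here is a toy suffix-stripping stemmer in Python. The suffix list is a hypothetical handful of English endings, far simpler than an established algorithm such as the Porter stemmer. The `min_stem` guard is also our own assumption.

```python
# Hypothetical suffix list; longer suffixes are tried first.
SUFFIXES = ("est", "ing", "er", "ed", "s")


def crude_stem(word, min_stem=3):
    """Strip the first matching suffix, provided at least min_stem letters remain."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)]
    return word


print([crude_stem(w) for w in ["dark", "darker", "darkest"]])  # ['dark', 'dark', 'dark']
print(crude_stem("cursed"))  # curs
```

The second call shows the weakness. With no notion of grammar, the stemmer mangles “cursed” into “curs”, and it cannot tell the verb from the noun. Lemmatization exists to address exactly that kind of understanding.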

Stopword removal aims to remove the overwhelming proportion of shorter, structural words that are ubiquitous throughout any text but largely irrelevant to its overall topic: the, and, were, it, they, but, if…. Whilst key to our understanding of texts, these terms contribute little to the theme or argument of a text.

Undermining our scheme to perform topic modelling, therefore, is the lamentable fact that, without understanding of either the text or its structure, we are largely unable to perform any of these tasks satisfactorily. We have neither an understanding of the grammatical form of Voynich words that would allow stemming or lemmatization, nor a list of stopwords to excise.

Whilst stemming and lemmatization are unapproachable, at least within the confines of this post, we can effect a crude form of stopword removal through a standard frequency analysis of the text. Stopwords are, in general, those words that both occur most frequently in a corpus of documents and appear across the majority of documents in that language. The second criterion ensures that words occurring frequently in obscure and specialised texts are not given undue importance.

The combined statistic is known as term frequency-inverse document frequency, or tf-idf, and is widely used in information retrieval. There, it identifies terms of specific interest within certain documents that are not shared by the wider corpus. For our purposes, we wish instead to identify and elide the ubiquitous, frequent terms that occur across the entire corpus. Given our lack of knowledge of the structure of the Voynich Manuscript, we consider each folio as a separate document. We use only the inverse document frequency, as we are uninterested in how common a word is within each document. A low inverse document frequency marks a word that appears on most folios. Guided by the typical size of stopword lists in a range of known languages, we therefore remove the 200 words with the lowest inverse document frequency scores.[1]
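The stopword step can be sketched in Python as follows, assuming each folio has already been tokenised into a list of transliterated words. The function names, the natural-log form of idf, and the alphabetical tie-breaking are our assumptions. The original analysis performed this step in R with tidytext, and the toy folios below are hypothetical.

```python
import math
from collections import Counter


def idf_scores(folios):
    """Inverse document frequency of every word, treating each folio as one document."""
    n_docs = len(folios)
    # Document frequency: the number of folios on which each word appears at least once.
    df = Counter(word for folio in folios for word in set(folio))
    return {word: math.log(n_docs / count) for word, count in df.items()}


def remove_ubiquitous(folios, n_stop=200):
    """Treat the n_stop lowest-idf words as stopwords and strip them from every folio."""
    idf = idf_scores(folios)
    # Ascending idf; ties broken alphabetically so the cut-off is deterministic.
    stopwords = set(sorted(idf, key=lambda w: (idf[w], w))[:n_stop])
    cleaned = [[w for w in folio if w not in stopwords] for folio in folios]
    return cleaned, stopwords


# Hypothetical toy folios of EVA-style transliterated words.
folios = [["daiin", "chedy", "ol"], ["daiin", "qokeedy"], ["daiin", "ol", "shedy"]]
cleaned, stops = remove_ubiquitous(folios, n_stop=1)
print(stops)    # {'daiin'}
print(cleaned)  # [['chedy', 'ol'], ['qokeedy'], ['ol', 'shedy']]
```

A word present on every folio has an idf of exactly zero (the log of 1), so it is always among the first to go. The default `n_stop=200` mirrors the cut-off described above.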

Having contorted the text into an appropriate form for analysis, we can begin the process of discerning its inner secrets. Our code relies on the R packages tidytext and stm, which allow for easy manipulation of document structure and topic models.[2]

Numerous Interpretations

Topic models are a cautionary example of recklessly unsupervised machine learning. As with most such approaches, there are a number of subjective choices to be made that affect the outcome. Perhaps the most influential is the selection of the number of topics that the model should generate. Whilst some approaches have been suggested to derive this number purely by analysis, in most cases it remains in the domain of the human supplicant. Typically, the number of topics is guided both by the structure of the text and by whatever arcane purpose the analysis might have. With our imposed lack of understanding, however, we must rely solely on crude metrics to make this most crucial of choices.

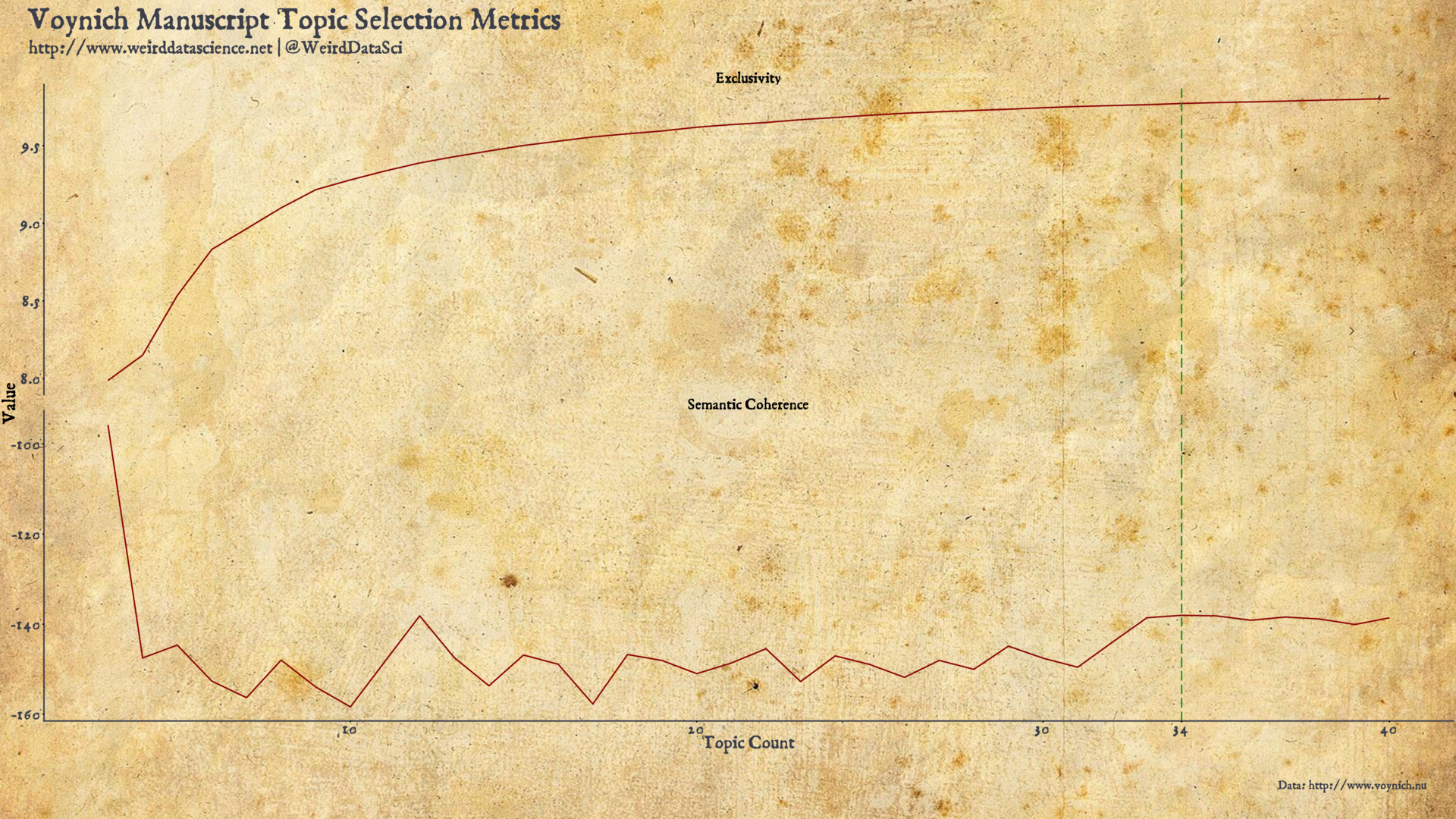

Several methods of assessment exist to quantify the fit of a topic model to the text. We employ two of them, guided by the stm package. The first is semantic coherence, which roughly expresses that the most probable words of a given topic should co-occur within the same documents. The second is exclusivity, which rewards models whose high-probability words for each topic are also relatively exclusive to that topic.
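Both ideas can be sketched in Python. The coherence function follows the common co-document formulation. For each pair of a topic's top words, it sums the log of how often the pair shares a document (plus one) relative to how often the higher-ranked word appears at all. The exclusivity function is a deliberately simplified stand-in of our own: it takes the share of each top word's probability mass that belongs to the topic. stm's own exclusivity score is built on the FREX metric, which also weighs word frequency, so the numbers will not match. All names and toy data here are hypothetical.

```python
import math

import numpy as np


def semantic_coherence(top_words, docs):
    """Co-document coherence of one topic.

    top_words: the topic's most probable words, in descending order of probability.
    docs: one set of words per document (here, per folio). Every top word is assumed
    to appear in at least one document, otherwise the division below fails.
    """
    def doc_freq(*words):
        return sum(1 for d in docs if all(w in d for w in words))

    score = 0.0
    for i in range(1, len(top_words)):
        for j in range(i):
            # The +1 keeps the log finite when a pair never shares a document.
            co = doc_freq(top_words[i], top_words[j])
            score += math.log((co + 1) / doc_freq(top_words[j]))
    return score


def simple_exclusivity(beta, topic, n_top=10):
    """Mean share of each top word's probability mass that belongs to this topic.

    beta: (n_topics, vocab_size) array of topic-word probabilities.
    A simplified stand-in, not stm's FREX-based exclusivity.
    """
    top = np.argsort(beta[topic])[::-1][:n_top]
    shares = beta[topic, top] / beta[:, top].sum(axis=0)
    return float(shares.mean())


docs = [{"daiin", "chedy"}, {"daiin", "chedy"}, {"daiin", "shedy"}]
print(semantic_coherence(["daiin", "chedy"], docs))  # 0.0
```

Higher (less negative) coherence means the top words tend to share folios. Higher exclusivity means the top words are less often claimed by other topics.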

We select an optimal number of topics by the simple process of fitting models with varying numbers of topics and assessing where these two scores are maximised. For the Voynich Manuscript, we observe that 34 topics appears to be initially optimal.[3]
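The two scores rarely peak at the same number of topics. A common way to narrow the choice is to keep only the candidates that no other candidate beats on both metrics at once, known as the Pareto front. The Python sketch below assumes each fitted model has already been summarised by a single (coherence, exclusivity) pair, for instance by averaging over its topics. The function name and the scores in the example are hypothetical and are not results from the Voynich models. Choosing among the surviving candidates is still a human judgement.

```python
def pareto_front(scores):
    """Return the topic counts whose (coherence, exclusivity) pair is not dominated.

    scores maps number_of_topics -> (semantic_coherence, exclusivity), higher is better.
    A candidate is dominated if another is at least as good on both metrics
    and strictly better on at least one.
    """
    front = []
    for k, (coh, exc) in scores.items():
        dominated = any(
            c2 >= coh and e2 >= exc and (c2 > coh or e2 > exc)
            for k2, (c2, e2) in scores.items()
            if k2 != k
        )
        if not dominated:
            front.append(k)
    return sorted(front)


# Hypothetical summary scores, for illustration only.
scores = {10: (-50.0, 8.0), 20: (-60.0, 9.0), 30: (-70.0, 8.5), 34: (-65.0, 9.2)}
print(pareto_front(scores))  # [10, 20, 34]
```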

The code for the initial preparation of the text, the search through topic models with varying numbers of topics, and the selection of the final 34-topic model is given below, alongside plotting code for the metrics diagram.

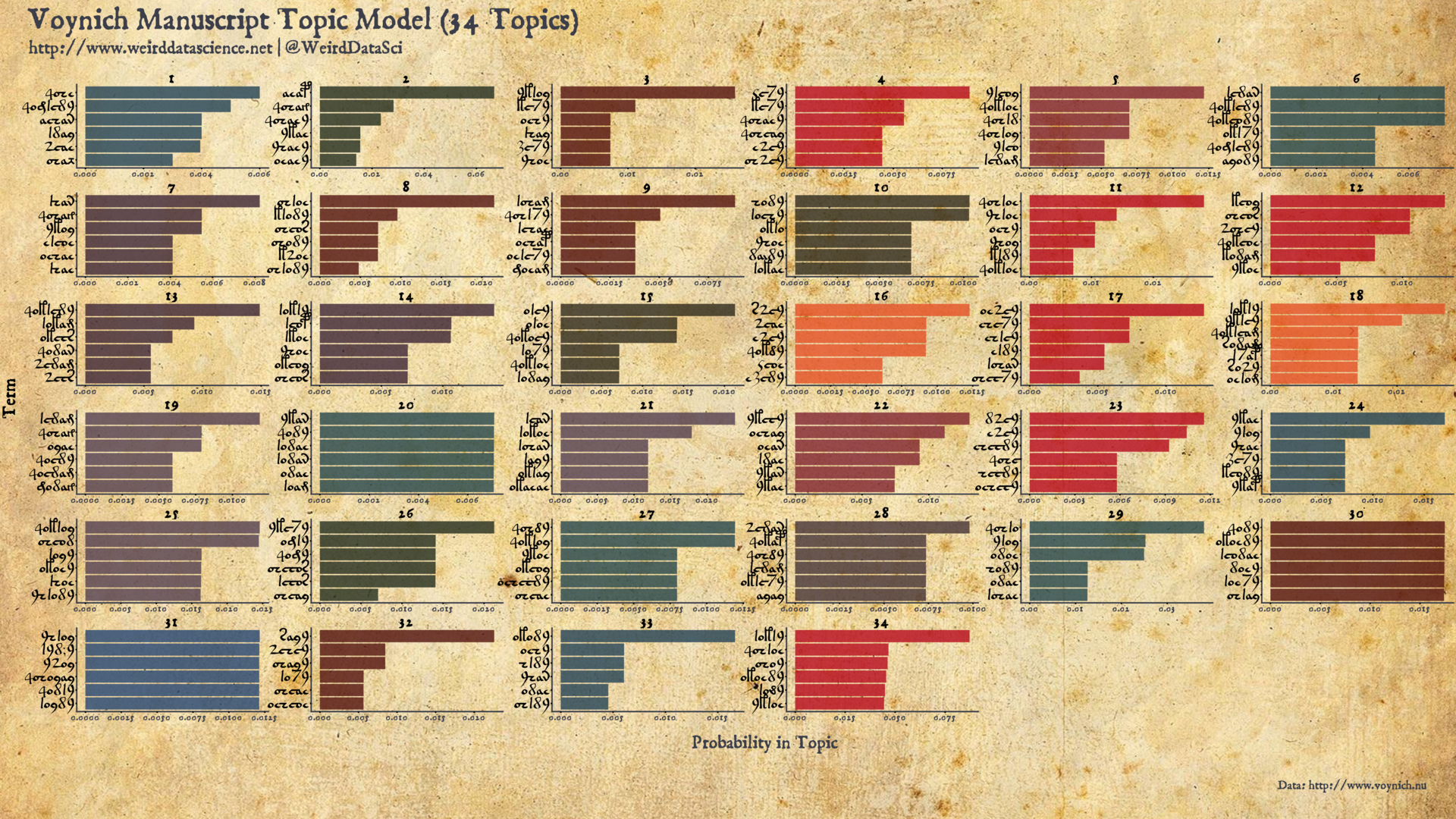

With these torturous steps finally trodden, our path leads at last to a model of the underlying word-generating probabilities of the Voynich Manuscript. Each facet shows the highest-probability words of one topic, in descending order of probability.
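Producing such a facet is a matter of sorting each topic's word distribution. The Python sketch below assumes the fitted topic-word probabilities are available as a NumPy matrix, here called `beta`, with one row per topic. The function name and toy values are hypothetical.

```python
import numpy as np


def top_words(beta, vocab, n=10):
    """List the n most probable words of every topic, most probable first.

    beta: (n_topics, vocab_size) array; row k is topic k's word distribution.
    """
    order = np.argsort(-beta, axis=1)[:, :n]
    return [[vocab[i] for i in row] for row in order]


beta = np.array([[0.1, 0.6, 0.3], [0.5, 0.2, 0.3]])
print(top_words(beta, ["daiin", "chedy", "ol"], n=2))  # [['chedy', 'ol'], ['daiin', 'ol']]
```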

Of Man and Machine

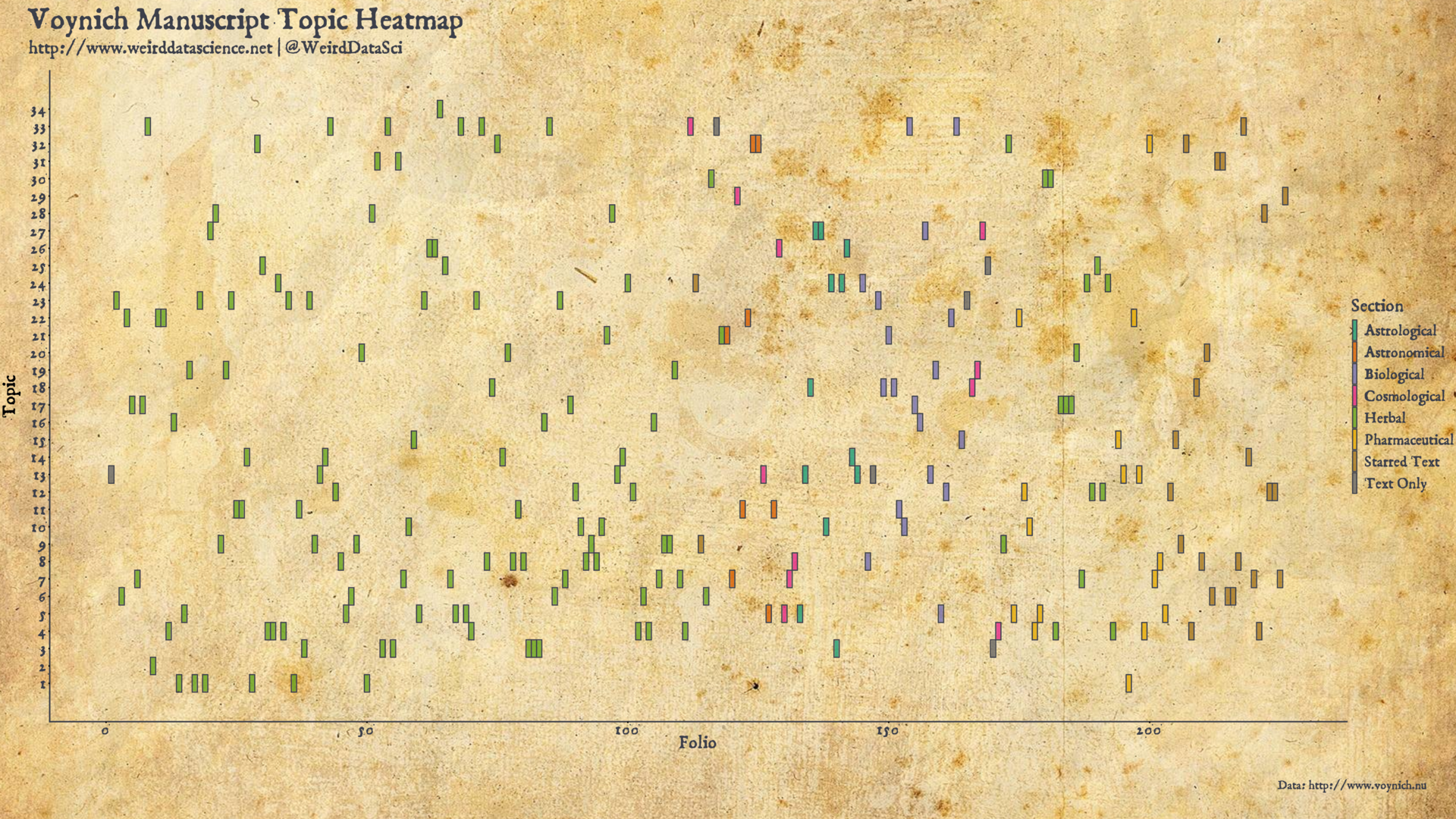

The topic model produces a set of topics in the form of probability distributions generating words. It associates each folio of the Voynich Manuscript with these topics probabilistically, based solely on the distribution of words in the text. There is a second form of topic identification, however, tentatively proposed by scholars of the manuscript: the obscure diagrams decorating almost every folio provide their own startling implications as to the themes detailed in the undeciphered prose.

We might wish to ask, then: do the topic assignments generated by the machine reflect the human interpretation? To what extent do pages decorated with herbal illuminations follow certain machine-identified topics compared with those assigned to astronomical charts?

The illustration-based thematic sections of the Voynich Manuscript fall into eight broad categories, according to Zandbergen. These sections are, briefly:

- herbal, detailing a range of unidentified plants, comprising most of the first half of the manuscript;

- astronomical, focusing on stars, planets, and astronomical symbols;

- cosmological, displaying obscure circular diagrams of a similar form to the astronomical;

- astrological, in which small humans are displayed mostly in circular diagrams alongside zodiac signs;

- biological, characterised by small drawings of human figures, often connected by tubes;

- pharmaceutical, detailing parts of plants and vessels for their preparation;

- starred text, divided into short paragraphs marked with a star, with no other illustrations; and

- text only pages.

With these contextual descriptions, we can examine the relationship between the speculative assignments of the topic model against the suggestions of the diagrams.

The colours in the above plot represent the manual human interpretation, whilst the location on the y-axis shows the latent Dirichlet allocation topic assignment.
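One simple way to reduce each folio to a single topic assignment, and to set it against Zandbergen's sections, is to take the topic with the largest proportion on that folio. The Python sketch below does this and counts the results per section. We assume here that the per-folio topic proportions are available as a matrix `theta`. This is not a description of how the plot itself was produced, and the function name and toy data are hypothetical.

```python
from collections import Counter, defaultdict

import numpy as np


def section_topic_table(theta, sections):
    """Cross-tabulate each folio's dominant topic against its illustration section.

    theta: (n_folios, n_topics) array of per-folio topic proportions.
    sections: one section label per folio, in the same order as the rows of theta.
    """
    dominant = np.argmax(theta, axis=1)  # index of the largest topic share per folio
    table = defaultdict(Counter)
    for section, topic in zip(sections, dominant):
        table[section][int(topic)] += 1
    return {section: dict(counts) for section, counts in table.items()}


theta = np.array([[0.7, 0.3], [0.2, 0.8], [0.6, 0.4]])
print(section_topic_table(theta, ["herbal", "astronomical", "herbal"]))
# {'herbal': {0: 2}, 'astronomical': {1: 1}}
```

If the text tracked the illustrations closely, each section's counts would concentrate on a few topics. Counts spread thinly across many topics would instead correspond to the near-uniform distribution of topics across sections.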

We might have harboured the fragile hope that such a diagram would demonstrate a clear delineation confirming the sectional, illustration-based breakdown of the Voynich Manuscript. At a first inspection, however, the topics identified by the analysis appear almost uniformly distributed across the pages of the manuscript.

The topic model rests on a number of assumptions, from the selection of stopwords through to the number of topics in the model. We must also be cautious: the apparent distribution of topics over the various sections may be deceptive. For the moment, we can present this initial topic model as a faltering first step in our descent into the hidden structures of the Voynich Manuscript. The next, and final, post in this series will develop both the statistical features and the topic model towards a firmer understanding of whether the apparent shift in theme suggested by the illustrations is statistically supported by the text.

Until then, read deeply but do not trust what you read.

Footnotes

1. The widely used NLTK stopword corpus contains a list of stopwords for 23 world languages, with a notable bias towards European languages. The median length of these stopword lists is 201.5, with values ranging from 53 for Turkish to 1784 for Slovene.

2. We have also relied extensively on the superb work and writing of Julia Silge on this topic.

3. It should be noted that topic modelling is more typically applied to much larger corpora of text than is possible with our restriction to the Voynich Manuscript. Given the relatively short nature of the text, we might prefer to focus on a smaller number of topics. The metrics plot shows a spike in semantic coherence around 12 topics that might be of interest in future analyses.
